tgoop.com/Machine_learn/3390

Detecting Backdoor Samples in Contrastive Language Image Pretraining

3 Feb 2025 · Hanxun Huang, Sarah Erfani, Yige Li, Xingjun Ma, James Bailey

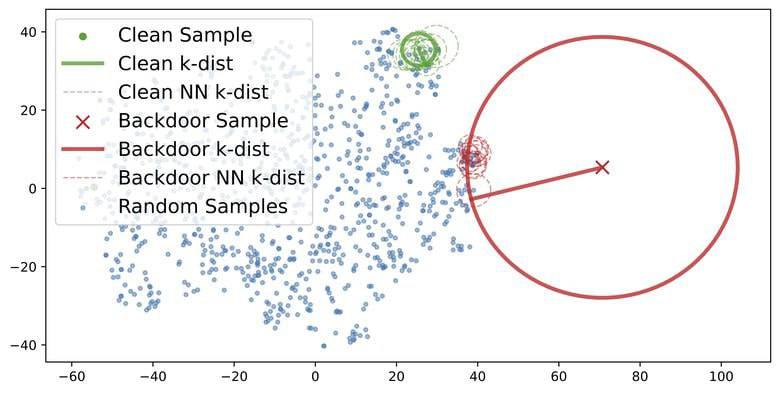

Contrastive language-image pretraining (CLIP) has been found to be vulnerable to poisoning backdoor attacks, in which an adversary can achieve an almost perfect attack success rate on CLIP models by poisoning only 0.01% of the training dataset. This raises security concerns about the current practice of pretraining large-scale models on unscrutinized web data using CLIP. In this work, we analyze the representations of backdoor-poisoned samples learned by CLIP models and find that they exhibit unique characteristics in their local subspace, i.e., their local neighborhoods are far sparser than those of clean samples. Based on this finding, we conduct a systematic study on detecting CLIP backdoor attacks and show that these attacks can be easily and efficiently detected by traditional density ratio-based local outlier detectors, whereas existing backdoor sample detection methods fail. Our experiments also reveal that an unintentional backdoor already exists in the original CC3M dataset and has been trained into a popular open-source model released by OpenCLIP. Based on our detector, one can clean up a million-scale web dataset (e.g., CC3M) efficiently within 15 minutes using 4 Nvidia A100 GPUs.
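To make the idea of a density ratio-based local outlier detector concrete, here is a minimal NumPy sketch. It is not the authors' implementation (see the linked code for that). It computes a simplified local outlier score: each sample's k-nearest-neighbor distance divided by the mean k-nearest-neighbor distance of its neighbors. The function names, the choice of `k`, and the synthetic "embeddings" are all assumptions made for illustration. In practice the inputs would be CLIP image or text embeddings.

```python
import numpy as np

def knn_distances(x, k):
    """Return each row's distance to its k-th nearest neighbor and the neighbor indices."""
    sq = np.sum(x ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (x @ x.T)  # squared Euclidean distances
    np.maximum(d2, 0.0, out=d2)                       # clip tiny negatives from rounding
    d = np.sqrt(d2)
    np.fill_diagonal(d, np.inf)                       # a point is not its own neighbor
    idx = np.argpartition(d, k - 1, axis=1)[:, :k]    # indices of the k nearest neighbors
    kdist = np.take_along_axis(d, idx, axis=1).max(axis=1)
    return kdist, idx

def density_ratio_scores(x, k=10):
    """Simplified local outlier score: own k-NN distance over the neighbors' mean k-NN distance.

    Scores near 1 mean the point is about as dense as its neighborhood;
    large scores mean its neighborhood is much sparser.
    """
    kdist, idx = knn_distances(x, k)
    neighbor_kdist = kdist[idx].mean(axis=1)
    return kdist / (neighbor_kdist + 1e-12)

# Synthetic stand-in for embeddings (hypothetical data, not CLIP features):
# 500 "clean" points in a dense cluster, plus 5 isolated points far from it.
rng = np.random.default_rng(0)
clean = rng.normal(size=(500, 8))
isolated = 20.0 * np.eye(8)[:5] + 0.01 * rng.normal(size=(5, 8))
x = np.vstack([clean, isolated])

scores = density_ratio_scores(x, k=10)
flagged = np.argsort(scores)[-5:]  # the 5 samples with the sparsest local neighborhoods
print(sorted(flagged.tolist()))
```

The brute-force pairwise distance matrix here is only suitable for small batches. Scoring a million-scale dataset would need batched or approximate nearest-neighbor search, which this sketch does not attempt.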

Paper: https://arxiv.org/pdf/2502.01385v1.pdf

Code: https://github.com/HanxunH/Detect-CLIP-Backdoor-Samples

Datasets: Conceptual Captions, CC12M, RedCaps

@Machine_learn

BY Machine learning books and papers
